Creation and Information

With the advent of the Information Age, scientists have learned how to measure information quantitatively in units called bits. In principle, the quantitative information content of any object or event, be it artificial or natural in origin, can be determined. Objects that contain information in the form of languages or codes (such as DNA) are most amenable to information measurement. Recently, intelligent design theorist William Dembski has proposed a method for detecting a type of information called complex specified information or CSI. 1 Where CSI is detected, design is implicated. CSI makes design detectable and thus a part of empirical science.

Information must meet three criteria to be classified as CSI and therefore implicate intelligent design. First, the object or event must be contingent. An object or event is contingent if it does not have to happen. Throwing a normal die may result in the number 3 being on top, but it does not have to turn out that way; obtaining a result of 3 is therefore a contingent event. If the die had only 3s on its six sides, obtaining a result of 3 would not be contingent. Non-contingent events are determined by necessity or law instead of chance or design. Next, the object or event must be assessed for its complexity or likelihood of occurrence. The complexity of an event or object increases as its chance likelihood decreases. Events that are likely and simple can be attributed to chance alone without invoking law or design. If an object or event is sufficiently unlikely to be considered complex (i.e. if it contains >500 bits, see below), then one must determine if the information in the object is specified. An object or event is specified if its information is intelligible or recognizable as an independent pattern. For example, the phrase “ME THINKS IT IS LIKE A WEASEL” is specified because the string of letters and spaces is intelligible and recognized as a sentence in the English language. An equally long but random string of letters such as “EZC WJISMO QUNEE NHYXA IHSMLW” is as complex as the previous phrase but not specified and hence attributable to chance. Dembski calls his contingency, complexity, and specification criteria for detecting design the Explanatory Filter.
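The three-step filter described above can be sketched as a small decision procedure. This is only an illustrative sketch, not Dembski's own formulation: the function names, the string labels, and the choice to treat exactly 500 bits as meeting the threshold are assumptions made for the example.

```python
import math

# Threshold taken from the text (500 bits); treating ">=" as meeting it is an assumption.
UCB_BITS = 500


def information_bits(probability: float) -> float:
    """Information content in bits of an event with the given chance probability."""
    return -math.log2(probability)


def explanatory_filter(contingent: bool, probability: float, specified: bool) -> str:
    """Hypothetical helper: walk the contingency, complexity, specification steps."""
    if not contingent:
        return "law"      # the event had to happen (necessity)
    if information_bits(probability) < UCB_BITS:
        return "chance"   # not complex enough to rule out chance
    if not specified:
        return "chance"   # complex, but matches no independent pattern
    return "design"       # contingent, complex, and specified


if __name__ == "__main__":
    # A die with a 3 on every face: the result is not contingent.
    print(explanatory_filter(contingent=False, probability=1.0, specified=True))
    # A fair die showing 3: contingent, but only about 2.6 bits.
    print(explanatory_filter(contingent=True, probability=1 / 6, specified=True))
    # A hypothetical 600-bit event, with and without specification.
    print(explanatory_filter(contingent=True, probability=2.0 ** -600, specified=False))
    print(explanatory_filter(contingent=True, probability=2.0 ** -600, specified=True))
```

The order of the checks mirrors the order in the text: contingency first, then complexity, then specification.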

The minimum amount of information required to indicate design is called the Universal Complexity Bound (UCB) and is equivalent to 500 bits of information. This number comes from a determination of the maximum number of arrangements of matter that could have ever been generated during the history of the universe by chance processes. Dembski calculated the UCB by multiplying the number of particles in the universe ($10^{80}$) times the age of the universe ($10^{25}$ seconds) 2 times the speed of the fastest possible process ($10^{45}$ events/second). This product, $10^{150}$, is equivalent to 500 bits of information. 3 Hence a contingent event can be (1) simple and unspecified, or (2) complex and unspecified, or (3) specified but not complex, or (4) complex and specified. Only complex specified information exceeding the UCB indicates design. As it turns out, the world of biochemistry is replete with molecules that exhibit CSI.
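The arithmetic behind the bound can be checked directly. The short sketch below multiplies the three figures quoted above and converts the product to bits using the formula given in the notes (information equals the negative base-2 logarithm of the probability). The variable names are illustrative. The exact result is a little over 498 bits, which the text rounds to 500.

```python
import math

# Figures as quoted in the text.
PARTICLES = 10 ** 80          # particles in the universe
AGE_SECONDS = 10 ** 25        # age of the universe in seconds
EVENTS_PER_SECOND = 10 ** 45  # fastest possible process

# Maximum number of events: 10**80 * 10**25 * 10**45 = 10**150.
max_events = PARTICLES * AGE_SECONDS * EVENTS_PER_SECOND

# I = -log2(p) with p = 1 / max_events, which equals log2(max_events).
ucb_bits = math.log2(max_events)

if __name__ == "__main__":
    print(f"maximum events = 10^{len(str(max_events)) - 1}")
    print(f"bound in bits  = {ucb_bits:.1f}")
```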

But naturalistic evolutionists claim that CSI can be generated by natural processes. Natural processes can be categorized as probabilistic (chance), deterministic (law), or a combination of law and chance (stochastic). In biology, the stochastic process of mutation acted upon by natural selection presumably accounts for macroevolution (bacteria to humans). Dembski argues that chance is too “dumb” to generate complexity (in a universe of finite age). He shows that law can only transmit information or shuffle it around but cannot generate new information. Stochastic processes may generate simple specified information but not CSI. Hence, the Neo-Darwinian mechanism of mutation-selection is powerless to generate new CSI.

Evolutionists believe evolutionary algorithms modeled in silico have demonstrated that stochastic processes possess information-generating capabilities. One such algorithm started with a random string of letters such as “EZC WJISMO QUNEE NHYXA IHSMLW” and converted it into the target phrase “ME THINKS IT IS LIKE A WEASEL” in just a few dozen steps. 4 At each step, all the characters that were like those in the target phrase were retained but all others were randomly changed. The new string of characters was again compared to the target phrase and all correct characters were retained. The process was repeated until the target phrase emerged. The reason that this evolutionary algorithm fails to generate new information is that the information it allegedly generates is actually built into the algorithm itself. 5 The target phrase was already a part of the algorithm. Instead of creating new information, this algorithm merely shifted the information it already contained into the target phrase; nothing new was generated at all. Hence, for an evolutionary algorithm to be able to “generate information,” it must be supplied the information from an outside source. The No Free Lunch theorems 6 state that evolutionary algorithms can only “generate” as much information as they already possess. In nature, natural selection can retain mutations that add survival/reproductive success value but is incapable of “guiding” the formation of new CSI in the DNA.
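The procedure as described in this paragraph (keep every character that already matches the target, randomly replace the rest, repeat) can be written out in a few lines. This is a minimal sketch of that description only, not the original program. The names `step` and `run`, the 27-symbol alphabet of capital letters plus space, and the seed are assumptions made for illustration. The number of steps depends on the random seed, so no step count is claimed here.

```python
import random
import string

ALPHABET = string.ascii_uppercase + " "   # assumed alphabet: 26 letters plus space
TARGET = "ME THINKS IT IS LIKE A WEASEL"  # the target phrase is part of the program


def step(current: str, target: str, rng: random.Random) -> str:
    """Keep characters that match the target; randomly change all others."""
    return "".join(
        c if c == t else rng.choice(ALPHABET)
        for c, t in zip(current, target)
    )


def run(target: str = TARGET, seed: int = 0, max_steps: int = 10_000):
    """Start from a random string and repeat `step` until the target emerges."""
    rng = random.Random(seed)
    current = "".join(rng.choice(ALPHABET) for _ in target)
    steps = 0
    while current != target and steps < max_steps:
        current = step(current, target, rng)
        steps += 1
    return current, steps


if __name__ == "__main__":
    final, steps = run()
    print(f"{final!r} reached after {steps} steps")
```

Note that `TARGET` appears inside `step` as the comparison standard at every iteration, which is the feature of the algorithm the paragraph draws attention to.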

Information generation is constrained by the Law of Conservation of Information (LCI), which states that information can be transmitted or degraded but not created by chance and natural processes (law). 7 Consequently, the information content of a closed system remains the same or decreases with time. The CSI of a closed system of natural causes (e.g. the universe) was either there eternally or added from an external source (i.e. the system was not always closed). Any closed system of natural causes of a finite duration received whatever CSI it contains before it became a closed system. Since the universe is a closed system of natural causes and of finite duration, the information seen in the biological world must have been present from the beginning and/or added at various times during its existence. Due to the LCI, evolutionists must explain how the information in DNA was present at the time of the Big Bang, was retained for billions of years in the matter of the cosmos, and was finally translated into the genetic code. Clearly, the origin and mechanism of translation of information in such a naturalistic scenario are enigmatic. One evolutionist who has acknowledged this dilemma is information theory expert Hubert P. Yockey. According to Yockey,

The reason that a scientific explanation for the origin of life has not been found may be that the problem is intractable or indeterminate and beyond human reasoning powers. …life is consistent with the laws of physics and chemistry but not derivable from them. We must…take life as an axiom…the time of the molecular biologist is better spent on understanding life as it is…having accepted the inexplicable axioms of these subjects. 8

More evidence for design in nature comes from irreducibly complex biological systems. 9 The definition of irreducible complexity has been given by Dembski:

A system performing a given basic function is irreducibly complex if it includes a set of well matched, mutually interacting, nonarbitrarily individuated parts such that each part in the set is indispensable to maintaining the system’s basic, and therefore original, function. 10

An irreducibly complex system must have all its parts and each of its parts must be tailored to its function, or the system will not work. One biological system that is irreducibly complex is the bacterial flagellum, a rotating whip-like structure which propels bacteria through water. Laboratory experiments have demonstrated that the flagellum stops working upon removal of any of its parts. 11 The problem this poses for evolution is that a modified or incomplete version of the flagellum would be non-functional, would not add survival/reproductive success value, and therefore would not be retained by natural selection. In other words, if the flagellum will only work when fabricated correctly and with all its parts, what could have been its evolutionary precursor? Any changes would render the flagellum inoperative and of no value to the organism. Evolutionists claim that parts of the flagellum are similar to other molecular machines in the cell and could have been co-opted from those sources to form the flagellum thereby negating the need for non-functional precursors of the flagellum. However, just having the parts of the flagellum is not sufficient because the parts must be assembled in a particular order and at the right time by other molecular machines which themselves are manufactured as needed. Hence, the system that assembles the flagellum is itself irreducibly complex and necessary for the construction of the flagellum. For this reason, the theory of co-optation does not work. 12

Another example of irreducible complexity is the system that synthesizes proteins. 13 The information in the DNA is read by an enzyme (RNA polymerase) that synthesizes a strand of messenger RNA. The mRNA is then moved out of the nucleus to a molecular factory called the ribosome. The ribosome is an assembly of enzymes (proteins) that translates the code in mRNA into a sequence of amino acids to form a protein. The enzyme RNA polymerase is made in this way. DNA is also replicated and repaired by proteins that are enzymes. Notice that all of the parts of the system are required for the system’s function. The DNA holds the information required to make the correct proteins and the proteins are required to read the information in the DNA and replicate the DNA. This situation speaks to the origin of life problem. Evolutionists have to explain how a simpler system, one which could have arisen by chance and the laws of chemistry, could have evolved into the DNA/protein system we observe now. A self-replicating molecule that both stores information and acts as a chemical catalyst would help fill this void. So far, no one has identified a biochemically relevant self-replicating molecule, let alone how it might have evolved into the complex DNA/protein system of the present world.

Evolutionists maintain that the observable process of microevolutionary change (variation within kinds) can be extrapolated to the inferred process of macroevolutionary change (molecules to man evolution). They point to observable examples of the action of natural selection to produce adaptations in organisms such as Darwin’s finches, antibiotic resistant bacteria, industrial melanism in peppered moths, and fruit flies. 14

In each case, however, there is no evidence of an increase in the information of the genome. 15 Indeed, in the case of bacterial resistance, there is evidence that the information content of the genome can decrease. 16 For example, single nucleotide mutations in some bacteria are known to impart resistance to the antibiotic streptomycin. This resistance involves the bacterial ribosomes. Ribosomes are primarily an assemblage of proteins, some of which act as chemical catalysts. Enzymes (catalytic proteins) usually only perform one type of reaction at a specific reactive site with one specific molecule. A molecule is bound to the reactive site, chemically transformed, and released. This specificity is caused by the shape of the protein at the reactive site that, in turn, ultimately comes from the protein’s amino acid sequence. Hence, there is a “lock and key” mechanism where only one molecule can act as the key that fits into the protein’s reactive site (lock). There are other sites in enzymes (not the reactive site) where specific molecules can bind (without reacting) that can change the overall shape of the protein and the reactive site and, hence, the enzyme’s reactivity. Nature uses these other sites to regulate enzymatic activity; some molecules that fit these sites slow down or turn off the enzyme while others accelerate its reactions. An abnormal change in the shape of the reactive site can lead to a loss of enzymatic specificity; the lock may now allow more than one key. Some mutations in the DNA that codes for the proteins of the ribosome can lead to changes in the amino acid sequences which change the shape and hence specificity of the reactive sites and the other binding sites.

Streptomycin attaches itself to bacterial ribosomes at a specific protein site causing a change in shape of the reactive site thereby interfering with protein production and causing the wrong proteins to be made; the ability of the ribosome to accurately translate the information coded in the mRNA to specific amino acids has been impaired. These bacteria die because their ribosomes can’t correctly make the necessary proteins. Ribosomes in mammals don’t have this site of attachment and so streptomycin does not interfere with protein production in mammals. Mutations change the site of attachment on the bacterial ribosomes so that the drug no longer binds thereby imparting antibiotic resistance. Several possible mutations can lead to resistance. However, this adaptation is associated with an information loss because the specificity of the ribosome is decreased; the speed and accuracy of protein production are reduced in the mutants. It is more correct to say the bacteria lose sensitivity to the drug than to say they gain resistance. Hence, antibiotic resistance in some bacteria to streptomycin is an example of an adaptive mutation purchased with a loss in information.

A recent review 17 of evolution experiments with microorganisms made several generalizations: (1) initially, populations adapt rapidly via beneficial mutations to new environments but the rate drops off quickly; (2) genetically identical organisms placed in separate but identical environments exhibit parallel molecular evolution, although the phenotypes may diverge; (3) most genes do not change even over thousands of generations; (4) adaptation to one environment may be associated with loss of fitness in another environment; and (5) in small populations, the rate of formation of adaptive mutations is outstripped by the formation of deleterious ones resulting in a decline in fitness of the population. These conclusions are completely consistent with the creationary position that microevolution involves no net gain in CSI. Indeed, observation (2) suggests that the new adaptive mutants either were initially present or resulted from genetic programming in the initial population; chance is not a good explanation here. Notice that even after thousands of generations (3), the microorganisms were still the same microorganisms; no macroevolutionary changes were observed.

There is evidence that organisms can rapidly change in phenotype (outward organism) to increase adaptation to a particular environment. Some changes have been observed that were so rapid that chance mutations cannot account for the adaptations (mutation rate was slower than changes in phenotype). Rapid adaptation can happen, however, if the organism is preprogrammed to turn on and off regulatory genes in response to environmental stimuli. For example, evidence that adaptive changes can be preprogrammed into the genome comes from experiments with guppies. 18 Two strains of guppies had different gestational patterns and predators. One strain of guppy matured late and had relatively few offspring. Its predator sought young guppies. The second strain matured early and had relatively many offspring. Its predator preferred mature guppies. The second strain was moved to an environment lacking the first strain and where the predator preferring young guppies resided. After just two years, only the first strain of guppies could be found. This rate of change suggests the adaptation was built in and not a result of the random mutation—natural selection mechanism. Hence, no new information was generated with the adaptation.

Evolutionist Richard Dawkins claims that the observable processes of gene duplication (where a daughter cell ends up with two copies of the DNA) and polyploidy (where genes replicate without cell division) create new genetic information. 19 However, having two copies of DNA, like having two copies of the Complete Works of Shakespeare, does not amount to having twice as much information. Information must be novel and complex to qualify as “new.”
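The two-copies analogy can be illustrated with a rough experiment. The sketch below uses compressed size as a stand-in for information content, which is an assumption of this example and not a measure the text itself uses. The 2,000-character random string is likewise a made-up stand-in for a text. Under those assumptions, a doubled text compresses to only slightly more than a single copy.

```python
import random
import string
import zlib


def compressed_size(data: bytes) -> int:
    """Size in bytes after zlib compression (a rough proxy for information content)."""
    return len(zlib.compress(data, 9))


# A stand-in "text": 2,000 random capital letters and spaces (seeded for repeatability).
rng = random.Random(0)
text = "".join(rng.choice(string.ascii_uppercase + " ") for _ in range(2000)).encode("ascii")

single = compressed_size(text)         # one copy
double = compressed_size(text + text)  # two identical copies back to back

if __name__ == "__main__":
    print(f"one copy:   {single} bytes compressed")
    print(f"two copies: {double} bytes compressed")
```

The second copy costs the compressor very little because it can be described as a repeat of the first.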

Evolutionists had long held that non-protein coding DNA was the remnants of a random, unguided evolutionary process. Indeed, why would a creator make so much useless, nonsense DNA, they reasoned. Evidence is now mounting, however, that suggests “junk DNA” does have function after all. 20 Several functions of noncoding DNA are now known. Untranslated portions of mRNA serve as sites of attachment to ribosomes. Organisms with an increased genome size usually develop more slowly. Some species of salamanders with a larger than usual genome (containing more non-coding DNA) are better able to survive in cold environments due to a reduced metabolic rate. Introns (non-protein coding DNA) apparently facilitate gene regulation and organization. Introns may guide the folding of DNA in the nucleus thereby ordering gene expression (creating an index) and hence development of an organism. Some introns catalyze their own removal during the RNA transcription process revealing a level of complexity previously unappreciated. Some introns are now thought to code for RNA that plays a role in ribosome production and regulation. Non-coding DNA may signal the expression of some genes and the repression of others. The length of the untranslated portion of mRNA can determine the RNA cytoplasmic half-life (how well it binds to the ribosome) and thus its rate of expression into proteins. Non-coding DNA on the ends of chromosomes helps maintain the integrity of the chromosomes and thereby perpetuate cell lines. Some non-coding DNA repairs breaks in DNA. There is evidence that some non-coding DNA sequences may help bacteria to adapt to otherwise lethal changes in their environment. Non-coding DNA may also be the genetic material used for microevolutionary changes. This would help explain how some organisms can have significant changes to their phenotype in a few generations, much faster than a mutation/selection mechanism could operate even if beneficial mutations were highly probable. The more we learn, the more DNA appears to be the product of design and not random processes.

In summary, design in nature is now empirically detectable as complex specified information (CSI) by the contingency, complexity, specification criteria where the complexity of an object or an event equals or exceeds 500 bits of information. Neither chance, law, nor stochastic processes are able to generate new CSI. Evolutionary algorithms can shuffle information around but cannot generate new CSI. The Law of Conservation of Information requires that the information content of a closed system remain the same or decrease with time. Irreducibly complex biological systems could not have evolved since any precursor would have been non-functional and hence not selected. The evolutionary theory of co-optation of pre-existing parts to build novel structures fails to account for the evolution of the systems required to assemble the parts into the new structure. There are no known examples of microevolutionary adaptations which involve an increase in CSI, but there are examples where the CSI decreased; there is therefore no empirical justification for the extrapolation of microevolution to macroevolution. There is evidence that the phenotypes of organisms can change rapidly and these changes are triggered by environmental cues. The rate of these changes rules out random mutations as the cause and instead suggests the activation/deactivation of genes already present. Gene duplication is not the same as generating new CSI. So-called “junk DNA” increasingly appears to have function, negating the argument that DNA could not have been designed.

“The basic concepts with which science has operated these last several hundred years are no longer adequate, certainly not in an information age, certainly not in an age where design is empirically detectable. Science faces a crisis of basic concepts. The way out of this crisis is to expand science to include design. To reinstate design within science is to liberate science, freeing it from restrictions that were always arbitrary and now have become intolerable.” 21

Evidences for Creation over Evolution

Editor’s Note: The following is argument No. 18 of 21 arguments compiled by various persons who believe that true science and biblical Christianity go hand in hand. Earlier parts in this series covered arguments 1-17.

This was produced jointly by the Creation Research Society, St. Joseph, Missouri, and Skilton House Ministries, Philadelphia, Pennsylvania. Editors: Paul G. Humber and Glen W. Wolfrom. Contributors: Harry Akers, Robert Gentet, Ed Garrett, Lane Lester, Ron Pass, Dave Sack, Curt Sewell, Helen Setterfield, Doug Sharp, and Laurence Tisdall.

18. The presence of two different sexes (touched on in #2 above) is a puzzle evolutionists have yet to invent a satisfactory answer for. Single-celled organisms reproduce by dividing themselves or budding off of themselves. Each “daughter” is exactly like the “mother.” So where did the male/female difference come from? Genetically, this male/female difference helps control—usually by elimination—the presence of persistent mutations in a population—the very mutations needed for evolution to continue! So the advent of sexual reproduction evolutionarily is a real bugaboo for the evolutionists and something they have yet to satisfactorily explain. The Bible is clear that human beings were made male and female from the beginning and, although it does not explicitly state that about the animals, the fact that these animals are recognized as beasts and birds and fish from the beginning is a strong indication that they were also male and female from the beginning.

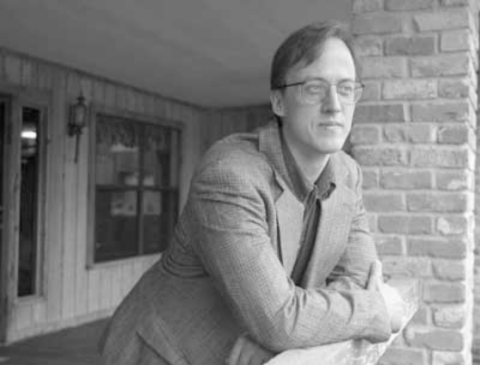

About William A. Dembski

A mathematician and a philosopher, William A. Dembski is associate research professor in the conceptual foundations of science at Baylor University and a senior fellow with Discovery Institute’s Center for Science and Culture in Seattle. He is also the executive director of the International Society for Complexity, Information, and Design (www.iscid.org). Dr. Dembski previously taught at Northwestern University, the University of Notre Dame, and the University of Dallas. He has done postdoctoral work in mathematics at MIT, in physics at the University of Chicago, and in computer science at Princeton University. A graduate of the University of Illinois at Chicago where he earned a B.A. in psychology, an M.S. in statistics, and a Ph.D. in philosophy, he also received a doctorate in mathematics from the University of Chicago in 1988 and a master of divinity degree from Princeton Theological Seminary in 1996. He has held National Science Foundation graduate and postdoctoral fellowships. Dr. Dembski has published articles in mathematics, philosophy, and theology journals and is the author/editor of seven books. In The Design Inference: Eliminating Chance Through Small Probabilities (Cambridge University Press, 1998), he examines the design argument in a post-Darwinian context and analyzes the connections linking chance, probability, and intelligent causation. The sequel to The Design Inference appeared with Rowman & Littlefield in 2002 and critiques Darwinian and other naturalistic accounts of evolution. It is titled No Free Lunch: Why Specified Complexity Cannot Be Purchased without Intelligence. Dr. Dembski is currently coediting a book with Michael Ruse for Cambridge University Press titled Debating Design: From Darwin to DNA. Another book, The Design Revolution: Answering the Toughest Questions about Intelligent Design will be forthcoming in the fall 2003 from InterVarsity Press. 
(taken from http://www.designinference.com/biosketch.htm and http://www.designinference.com/documents/CVpdf.may2003.pdf).

1. Dembski, William. Intelligent Design. 1999, InterVarsity Press.

2. Dembski accepts old earth/old universe ages.

3. Information $I = -\log_2 p$, where $p$ is the probability of the event. Hence the UCB $= -\log_2 10^{-150} \approx 500$ bits.

4. Dembski, William. No Free Lunch. 2002, Rowman and Littlefield, p. 180-184.

5. Dembski. 2002, chapter 4.

6. Dembski. 2002, p. 196, 203-204.

7. Dembski. 1999, p. 170.

8. Yockey, Hubert P. Information and Molecular Biology. 1992, Cambridge University Press, p. 290-291.

9. Behe, Michael. Darwin's Black Box: The Biochemical Challenge to Evolution. 1996, The Free Press.

10. Dembski. 2002, p. 285.

11. Behe, p. 69-73.

12. Unlocking the Mystery of Life, a video produced in 2002 by Illustra Media. It is available at http://www.illustramedia.com.

13. Dembski. 2002, p. 254-256.

14. Wells, Jonathan. Icons of Evolution. 2000, Regnery.

15. In the case of peppered moths it is likely that we don’t understand the cause of the observed variations. See reference 14 for details.

16. Spetner, Lee. Not By Chance. 1998, Judaica Press, p. 138-141.

17. Elena, Santiago F., Lenski, Richard E. Evolution Experiments with Microorganisms: The Dynamics and Genetic Bases of Adaptation. Nature Reviews: Genetics, 2003, 4, 457-469.

18. Spetner, p. 205-206.

19. Rosenhouse, Jason. The Design Detectives. Skeptic, 2001, 8(4), p. 60.

20. Standish, Timothy G. Rushing to Judgment: Functionality in Noncoding or “JUNK” DNA. Origins, 2002, 53: p. 7-30. Available online at http://www.grisda.org/origins/53007.pdf; Sarfati, Jonathan. Refuting Evolution 2 (Answers in Genesis, 2002), p. 122-125; Walkup, Linda K. CEN Technical Journal 2000, 14(2): p. 18-30; Hirotsune, Shinji et al. Nature, 2003, 423: p. 91-96.

21. Dembski. 1999, p. 152.